Zhiwei Li

zli404 [AT] connect.hkust-gz.edu.cn

The Great Wall at Badaling, 2024.

Hello, I’m Zhiwei Li (李志伟). I’m currently pursuing my Ph.D. at the Hong Kong University of Science and Technology (Guangzhou), supervised by Prof. Zhijiang Guo. I previously completed my M.Sc. in the School of Data Science at Fudan University, where I was advised by Prof. Weizhong Zhang. Before that, I earned my B.Sc. from the School of Statistics and Data Science at Shanghai University of Finance and Economics in Summer 2023.

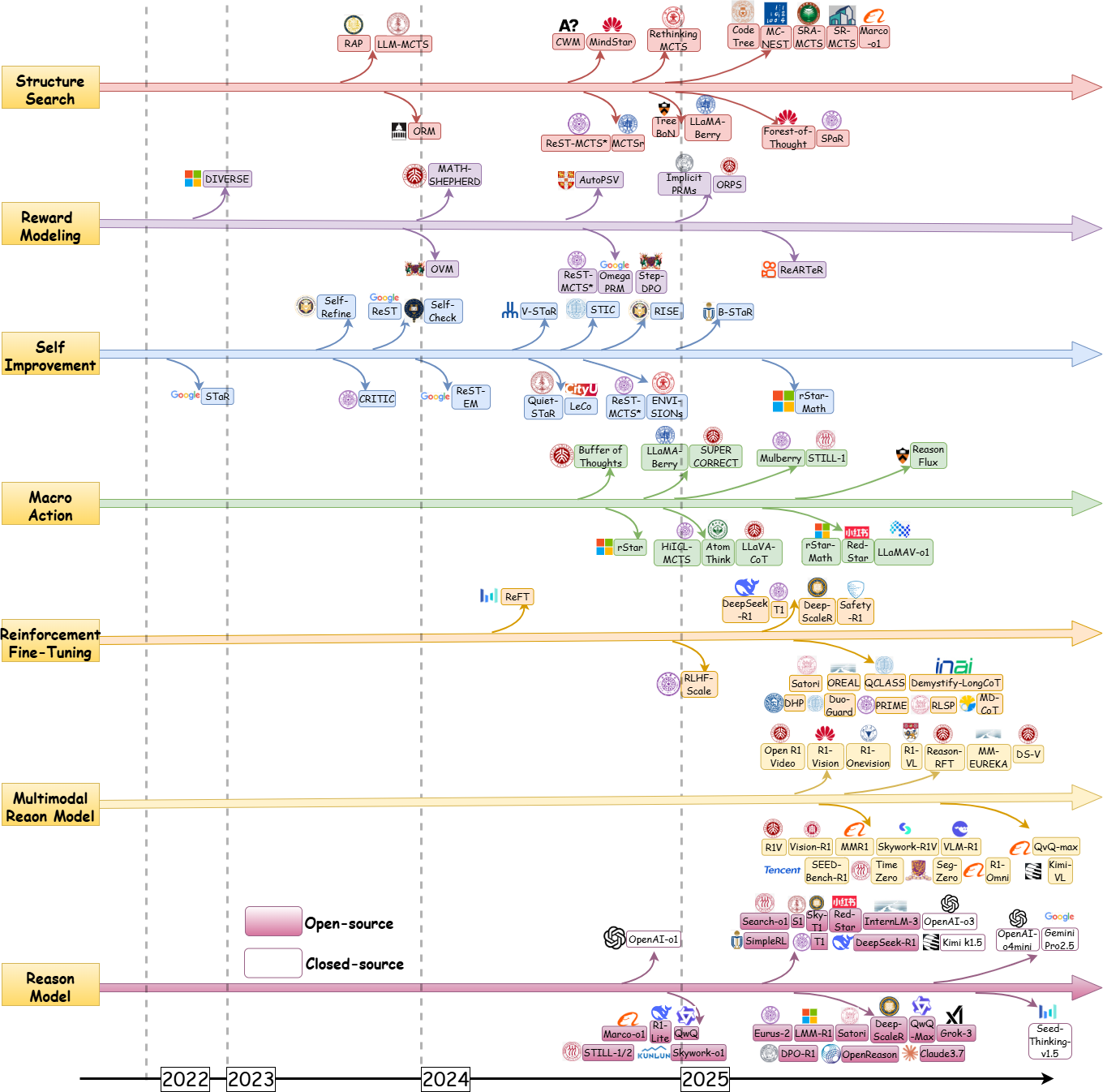

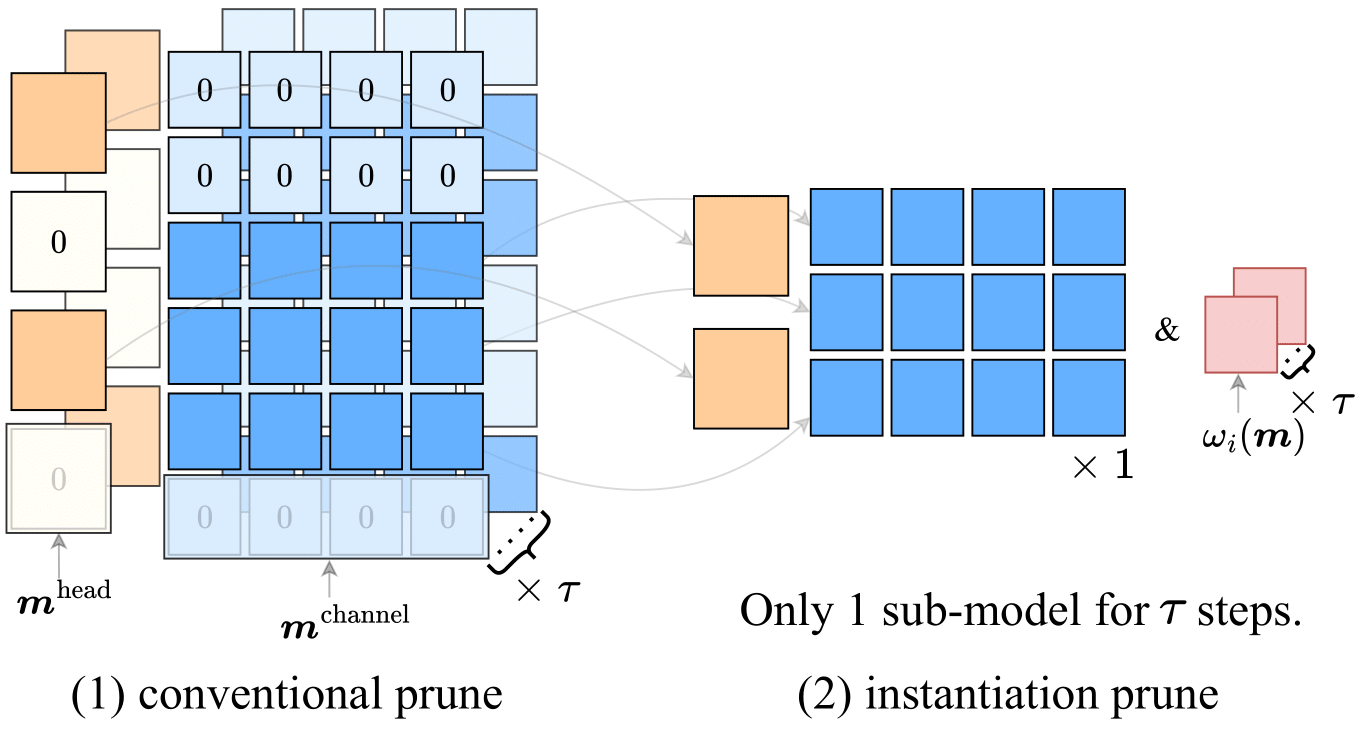

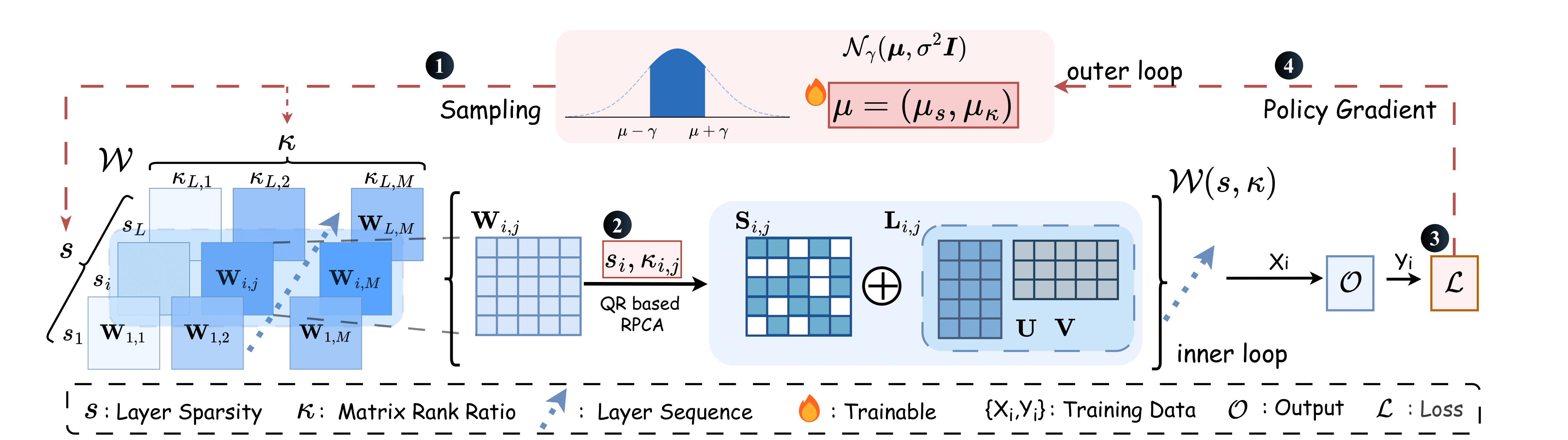

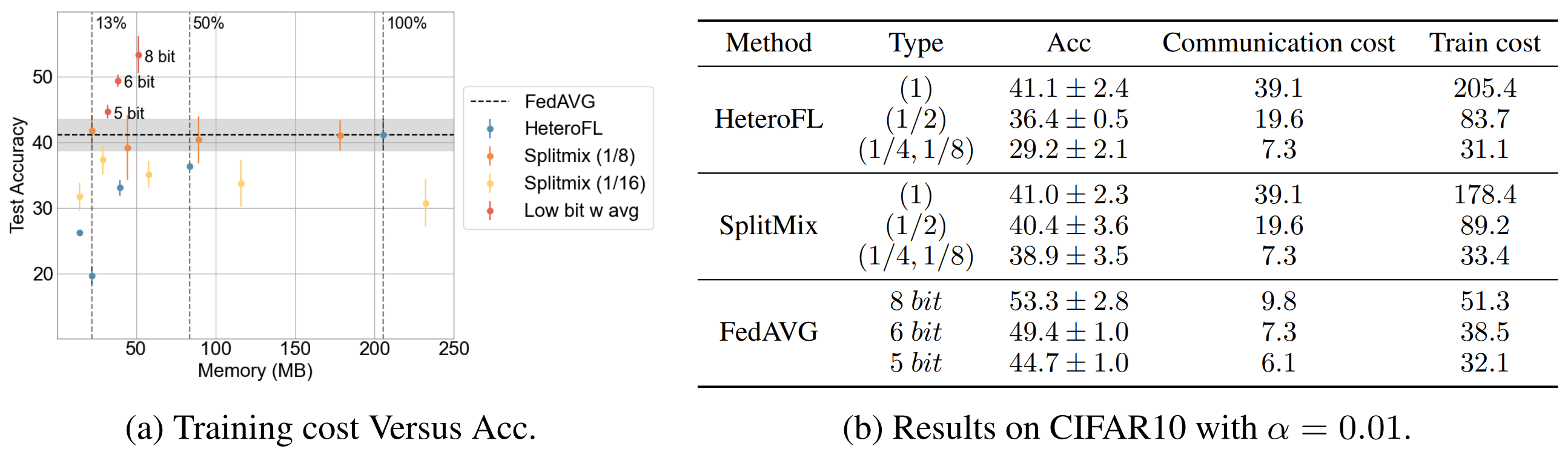

My current research centers on reasoning in large language models, especially multimodal LLMs, reinforcement learning, and multi-turn agents. I previously worked on model compression and acceleration, including LLM pruning, low-precision training, and accelerated sampling for diffusion models.

For a complete overview of my research and experience, please refer to my CV.

news

| Date | News |
|---|---|
| Sep 19, 2025 | Three papers are accepted by NeurIPS 2025! |
| Aug 23, 2025 | New journey begins at HKUST(GZ)! |
| Sep 27, 2024 | One paper is accepted by NeurIPS 2024! |